IBM: Watsonx for Support

As design lead, I helped shape watsonx for IBM technical support from discovery through prototyping, introducing intelligent workflows and conversational assistants that reduced repetitive tasks and surfaced critical context. Built on IBM’s AI platform, watsonx, this work redefined how global support leverages AI, accelerating case resolution and setting a new standard for scaling AI-driven experiences across IBM.

If you would like to know more, I'm happy to share details! Just shoot me a message.

To honor my NDA, I’ve kept this case study free of confidential info. Designs are my own recreations using public design systems, and don’t reflect IBM’s views.

Role

Design Lead

Team

IBM, CIO Design

Timeline

2022 - 2025

Disciplines

UX/UI Design, Service Design, Product Design, UX Research

Overview

IBM’s global support organization handles millions of complex cases each year. Historically, it followed a unified strategic vision to streamline support across hardware, software, and services. By late 2023, however, it was clear this framework needed to evolve. Evolving it has been a multiyear effort that builds on existing foundations to augment support with AI, driven by the need to stay ahead of rising complexity and customer expectations.

With the rise of generative AI and conversational tooling, there was an opportunity to reimagine support - not just for clients, but for the support engineers who power it. Our goal was to define a new vision and roadmap that would bring AI-powered assistance to life, using watsonx as the foundation.

My Role

As a design lead on the Cognitive Support Platform (CSP), I co-led a series of design thinking workshops between Fall 2023 and Spring 2024 to explore how watsonx and AI could be meaningfully applied to IBM’s internal support experience. These workshops were conducted in collaboration with two senior service designers, one product manager, and two IT architects, and involved 30+ participants across multiple business units.

By Fall/Winter 2024, I prototyped a new AI-powered chatbot experience, IBM Support Coach, that supported the first iteration of this updated vision. I partnered closely with platform teams to evaluate feasibility and implementation.

Research Methodologies

This series of design workshops with 30+ stakeholders helped align teams around shared problems worth solving and served as the catalyst for the updated vision and roadmap that followed. Our goal was to move beyond abstract ideas and identify real, high-friction moments in the support engineer and client experience that could be transformed through watsonx.

We used design thinking exercises, journey mapping, AI opportunity spotting, and group prioritization techniques to identify high-impact opportunities for AI across the support experience.

Blurred screenshot from our design workshop, preserving privacy while capturing how we mapped pain points and AI opportunities.

We began with a fundamental question:

How can we apply watsonx to real internal use cases at IBM, so we can learn, improve, and set the direction before launching it externally?

To explore this, I co-led a design thinking workshop focused on surfacing concrete, high-impact opportunities for AI within IBM Support. Over 15 hours in one week, 30+ stakeholders from design, product, engineering, and operations worked with us to map pain points, current workflows, and potential intervention points for AI across both the support engineer and client experience.

Early Insights

From the design thinking workshop, several patterns emerged across teams. Despite differences in role or region, many participants described similar friction points in their day-to-day experience.

| 🔎 Friction Point | 💡 AI Opportunity |
| --- | --- |
| Manual, repetitive tasks eat up time | Auto-generate summaries, logs, responses |
| Fragmented systems & context loss | Surface case history/context dynamically |
| Clients expect proactive, tailored help | Personalize communications & proactive support |
| Documentation is outdated or missing | Use LLMs to generate new knowledge articles post-resolution |

The Discovery

Our workshop revealed fragmentation: clients bouncing between inconsistent entry points, support engineers buried in redundant tasks, and teams unaware of overlapping tools.

Across roles, a shared vision emerged: a unified, AI-powered support experience that could guide users from first contact to resolution.

Key opportunity areas included:

- A universal support chatbot embedded across IBM channels

- Modern, context-aware client identification

- A single, AI-enhanced interface to unify support tools

- Better coordination between support, sales, and product teams

watsonx wasn’t just about automation—it was the connective layer to simplify support at scale. From that insight, we shifted from designing features to designing AI-powered flows that adapt to the user, not the other way around.

Translating insights into an AI-native support strategy

Before jumping into solutions, we needed to zoom out and redefine what intelligent support should actually feel like. The existing vision statement had scaled IBM Support for years, but it wasn’t built with AI in mind.

Building on the insights from Phase 1, I partnered with service design and product leads to co-lead a virtual design workshop from December 2023 to January 2024, aimed at rethinking the long-term direction. This session brought together a small, cross-functional group to craft a new vision statement and roadmap that teams across IBM could align around.

Together, we mapped what a smarter, more adaptive support journey could look like from the first client interaction to final resolution. This reframing became the foundation for a more connected, AI-native strategy across IBM Support.

Designing the AI-Augmented Journey

As part of the session, we developed a vision storyboard that followed two core personas through their support journeys: one support engineer and one client. We outlined key moments where AI could step in, from log analysis and case summarization to proactive updates and task automation. These storyboards helped translate abstract strategy into tangible, human-centered experiences and gave teams a shared narrative to design and build from.

Blurred screenshot from our design workshop, preserving privacy while capturing how we mapped pain points and AI opportunities.

Explorations in AI-Augmented Design

With journey maps and hill statements defined, the next challenge was bringing those ideas to life in the interface. But before jumping into detailed wireframes, we took a step back to explore a fundamental question: Where should AI-powered support live inside the experience?

Rather than assuming a single format, we explored multiple ways AI could show up in the interface:

- As a conversational assistant embedded in the support engineer workspace & client portal

- As a browser plugin that enhances existing UI components

- As context-aware overlays or prompts that surface at the right time

Each approach came with tradeoffs. The conversational assistant was the most flexible but required complex integration across multiple platforms and design systems. The plugin was lightweight but lacked persistent context, while the prompts were the hardest to implement technically due to their need for real-time awareness. We realized there are two main uses of AI assistance: contextual help within the record a user is looking at, and answers to general questions and requests for information.

I created a series of wireframes to explore how AI could reduce friction, surface insight, and give support engineers time back without disrupting their flow. This early feature-format exploration helped shape how AI would be experienced: not just as a tool, but as a "coach". Accordingly, we decided to focus first on a conversational assistant and context-aware overlays within the interface.

The following wireframes were created and iterated in collaboration with product and engineering partners.

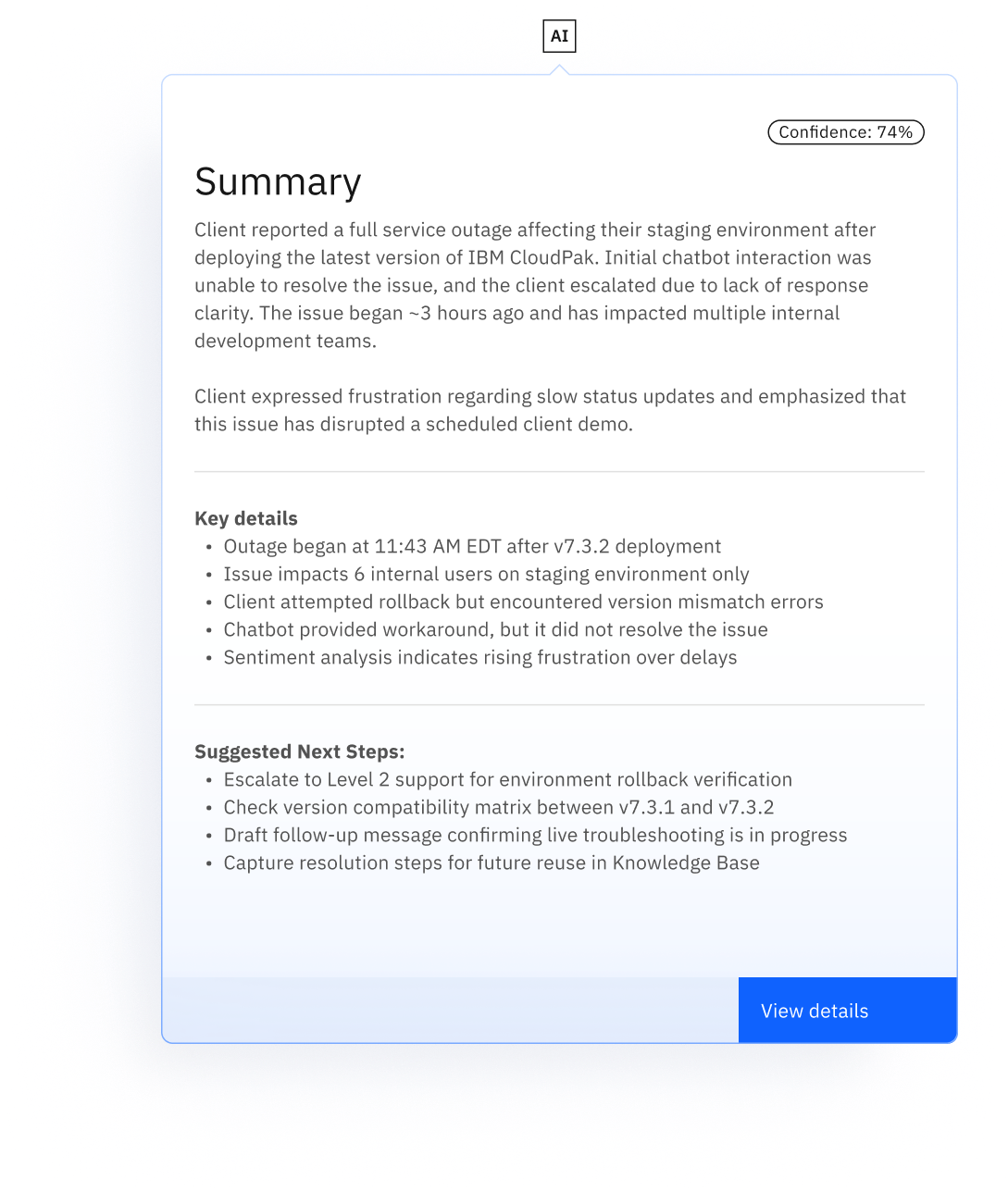

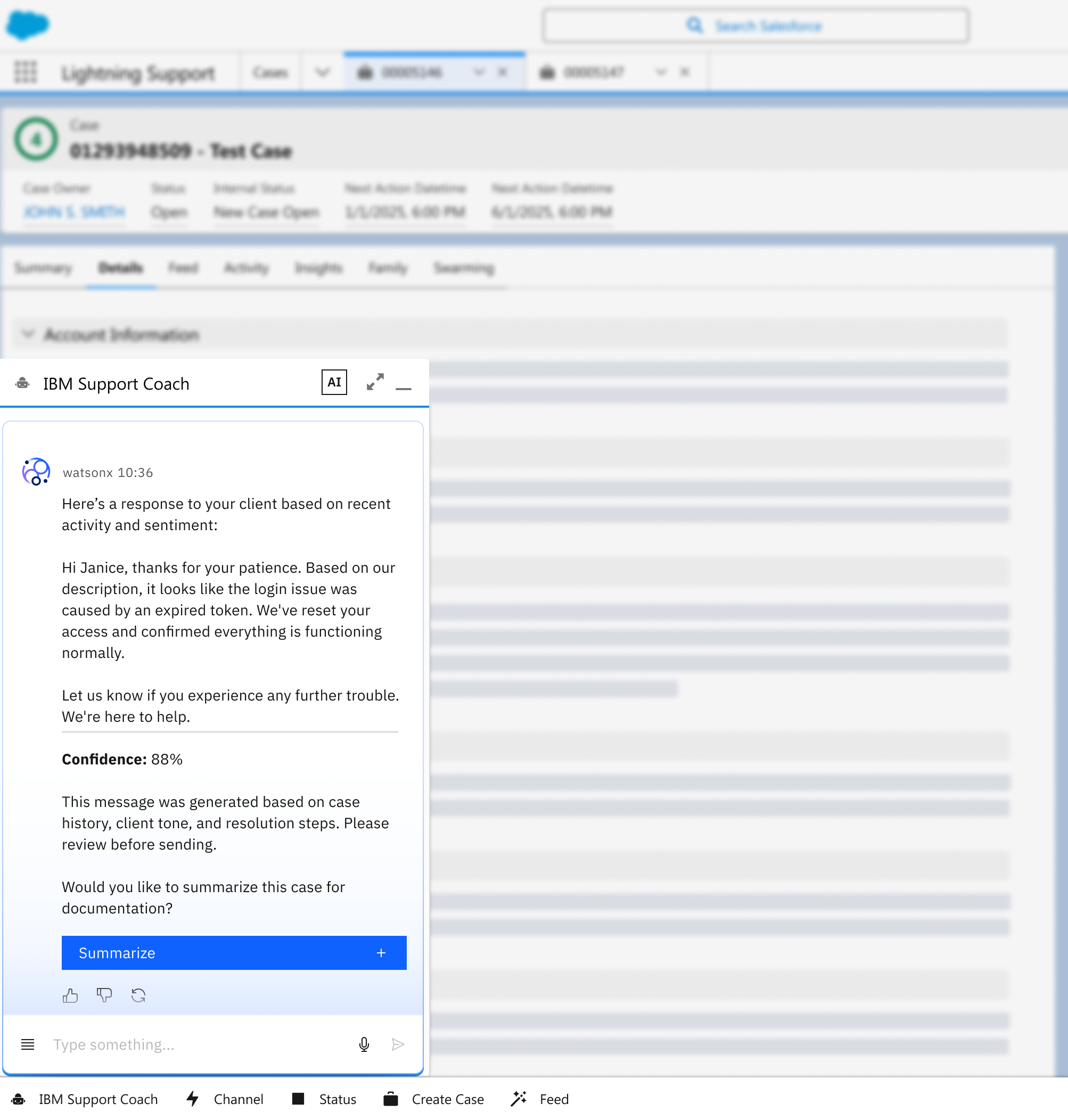

Smart Summaries

Less scrolling, more solving.

The first thing a support engineer sees should already reduce effort.

I designed a case summary panel powered by AI to highlight key case details and confidence level at a glance, following IBM's Carbon Design System.

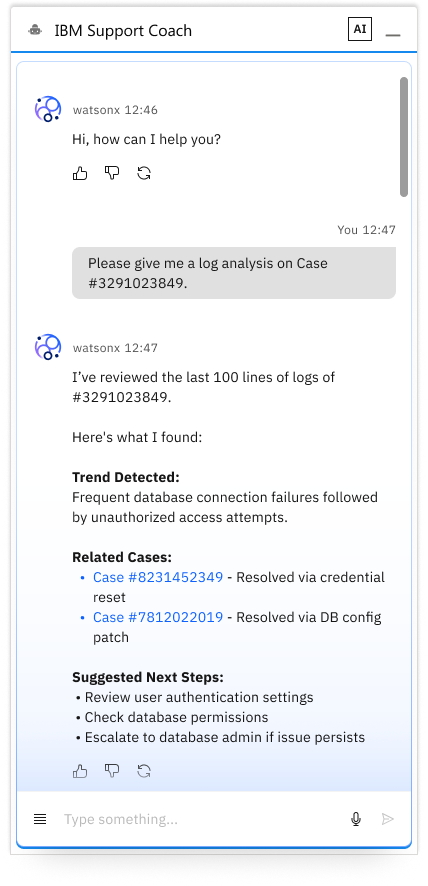

Built-In Log Analysis

AI that reads between the lines.

Log reviews often slow support engineers down. This concept integrates AI-powered analysis to identify trends, surface relevant past cases, and suggest next steps within the same screen.

Assistive Writing Tools

Help when it’s helpful.

From client responses to internal wrap-ups, I explored how support engineers could use AI to generate clear drafts.

The following design also explores placing the conversational assistant in the bottom utility bar of the screen while the engineer is looking at a case.

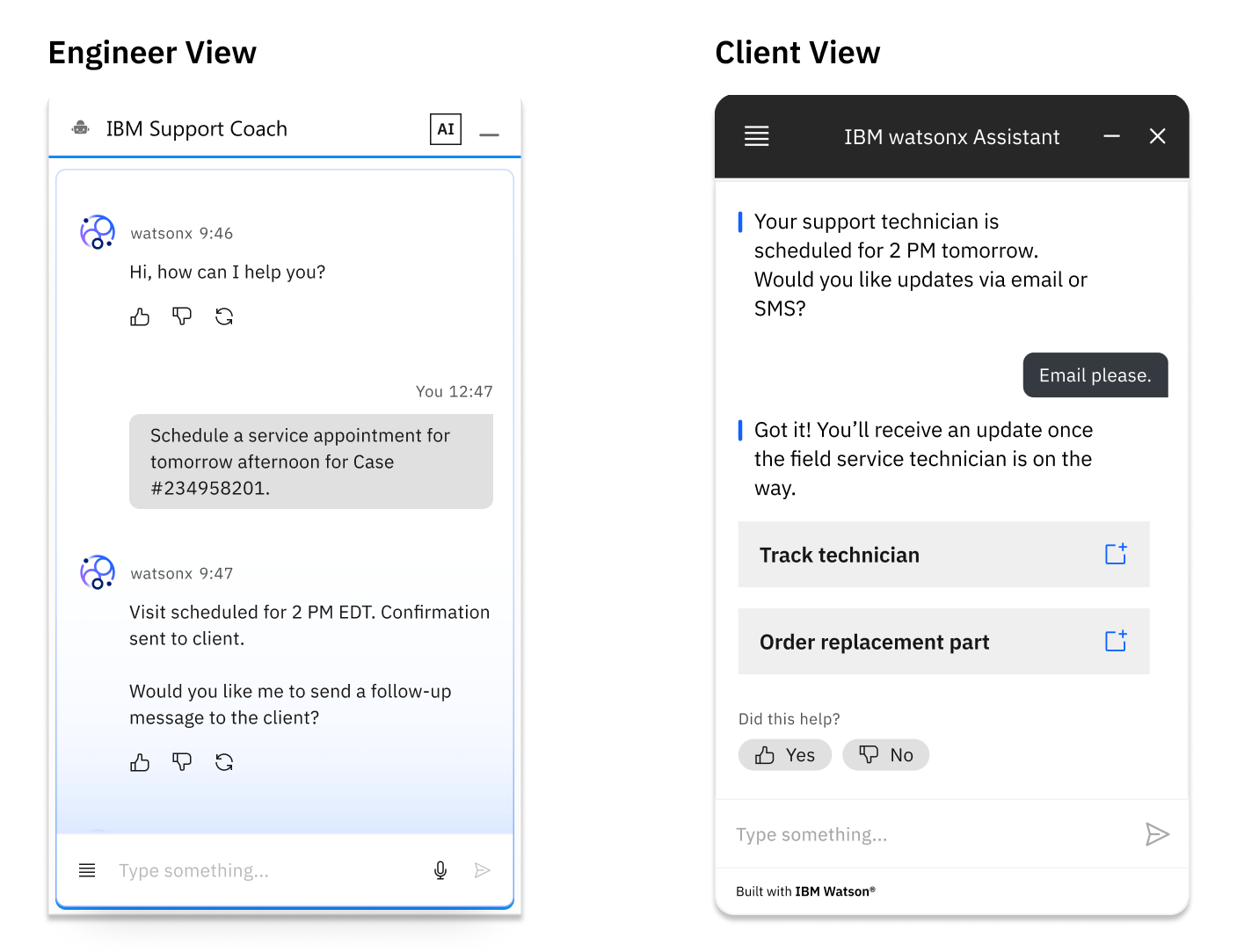

Conversational Assistant

Useful for both engineer + client.

Inspired by the journey storyboard, I prototyped an in-platform assistant that handles actions like ordering parts, scheduling support, or escalating cases without the support engineer needing to switch tools.

In parallel, I also explored how a conversational assistant might support clients by answering questions, surfacing relevant updates, and guiding them through resolution steps in a more natural, contextual way.

Note that the two views follow different design systems because they live on different platforms: the internal engineer view is built on the Salesforce Lightning Design System and the client view on IBM's Carbon Design System, yet both are powered by IBM watsonx.

Designing for Implementation: Turning Vision into Scalable Systems

With foundational concepts and interface prototypes in place, the next step was translating design intent into a roadmap that could work across IBM’s layered platforms and global teams. The Cognitive Support Platform spans multiple design systems (Salesforce Lightning for internal agents, IBM Carbon for clients) and must integrate with tools like CSP, Support Insights, watsonx APIs, and several others in the ecosystem.

To ensure implementation was grounded in reality and not just vision, we considered the following:

- Building a framework of assets & guidelines for when and how the AI is triggered

- Designing and exploring patterns that could be reused across different case types and teams

- Ensuring consistency in tone, visual clarity, and accessibility across Carbon and Lightning systems

- Collaborating with engineering to define data requirements and conversational mode architecture

The key to this phase was identifying “low-lift, high-impact” entry points. The overall roadmap began with support engineer capabilities, starting with AI summaries and action-triggering assistants, then moved toward more advanced integrations like log analysis and personalized guidance, and eventually the client experience.

Impact

The new vision, assistant framework, and experience patterns I designed contributed to a broader shift across IBM’s Cognitive Support Platform. Since these efforts began, AI-driven tools built on watsonx.ai and watsonx Orchestrate have already demonstrated massive time savings for IBM's support teams.

These outcomes point to the scale of what’s possible. My work laid the foundation for a large transformation in how IBM delivers intelligent support at scale, turning what was once a fragmented experience into one that’s increasingly proactive, contextual, and AI-powered.

The foundation is in place. Intelligent support at IBM is no longer a concept - it’s in motion.

17,000+ hours

saved annually from AI-generated answers to low-complexity cases

31 minutes

saved per case through log analysis and anomaly detection

56,000+ hours

saved monthly by automating repetitive support workflows